September 12, 2023

By Patricia Waldron

One way that scientists train robots and artificial intelligence (AI) models to perform tasks – think self-driving cars – is by feeding them a perfect demonstration of what to do and asking them to copy it. This process, called imitation learning, is slow and expensive, and the resulting systems often can’t handle more complex real-world scenarios.

Instead, what if researchers could provide lots of imperfect demonstrations and have the system piece together a better approach? This strategy, called superhuman imitation learning, is the focus of a new project co-led by Sanjiban Choudhury, assistant professor of computer science in the Cornell Ann S. Bowers College of Computing and Information Science, along with Brian Ziebart and Xinhua Zhang of the University of Illinois at Chicago. They have received a nearly $1.2M grant from the National Science Foundation to support this work for three years.

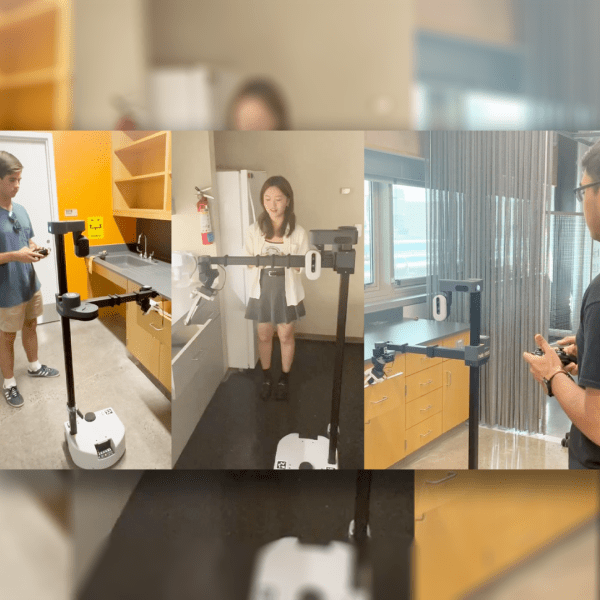

Choudhury, who heads the People and Robot Teaching and Learning (PoRTaL) group, will use this approach to train robots that assist people at home so robots can one day safely and efficiently perform tasks, like fetching a can of soup from the pantry and heating it up on the stove.

To test this idea, Choudhury will have multiple users manipulate the robot to perform a series of tasks, like opening a drawer. Some will guide the robot well, but others will make mistakes. Then his group will develop an algorithm that, instead of blindly copying the demonstrations, tries to outperform them on a number of objectives, such as not opening the drawer too slowly or with too much force.
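As a rough illustration of what "outperforming the demonstrations on a number of objectives" could mean, the toy Python sketch below scores candidate behaviors by the fraction of demonstrations they beat on every objective at once. It is not the researchers' algorithm: the function names, the two cost measures (seconds taken and peak force) and all the numbers are hypothetical, chosen only to mirror the drawer example.

```python
from typing import Sequence


def dominates(candidate: Sequence[float], demo: Sequence[float]) -> bool:
    """True if the candidate is no worse on every cost and strictly better on at least one."""
    no_worse = all(c <= d for c, d in zip(candidate, demo))
    strictly_better = any(c < d for c, d in zip(candidate, demo))
    return no_worse and strictly_better


def fraction_outperformed(candidate: Sequence[float], demos: Sequence[Sequence[float]]) -> float:
    """Share of demonstrations that the candidate beats on all objectives together."""
    return sum(dominates(candidate, d) for d in demos) / len(demos)


# Hypothetical costs per drawer-opening attempt: (seconds taken, peak force in newtons).
# Lower is better for both. Some demonstrations are too slow, others too forceful.
demos = [(4.0, 12.0), (9.0, 8.0), (5.0, 20.0), (3.5, 9.0)]

# Hypothetical candidate behaviors the robot could choose between.
candidates = {
    "slow_gentle": (8.0, 7.0),
    "balanced": (3.8, 9.5),
    "fast_hard": (3.0, 25.0),
}

# Pick the candidate that outperforms the largest share of demonstrations.
best = max(candidates, key=lambda name: fraction_outperformed(candidates[name], demos))
print(best, fraction_outperformed(candidates[best], demos))
```

In this made-up data, no candidate beats every demonstration, which is why the sketch ranks by the share outperformed rather than requiring a clean sweep. A real system would search over robot behaviors rather than choose from a fixed list.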

“We would like to see if the robot can still learn a behavior, even from these imperfect demonstrations, and do the task very, very well,” Choudhury said. He expects that learning from multiple teachers will make the robots more efficient and adaptable.

Ziebart’s group will explore the theoretical limits of this approach and benchmark how well the algorithm performs by applying it to open-source data from people playing old-school Atari games, like Pong and Breakout. If the algorithm can train AI to surpass human high scores, they’ll know it is successfully taking in the best parts of the demonstrations and ignoring the mistakes.

In an entirely different application, Zhang will test whether superhuman imitation learning can enable an AI system to pick the best treatment options for patients with head and neck cancers.

The inspiration for this project came when Choudhury and Ziebart worked together at Aurora, an autonomous driving technology company that uses imitation learning to teach self-driving cars. Cleaning the data to provide perfect demonstrations was a major challenge that slowed down the process. “We need better algorithms than what we have today to deal with this bottleneck,” Choudhury said.

If successful, the approach will have diverse applications in robotics and many AI systems, and could even be used to ensure that large language models, like ChatGPT, provide accurate information.

Choudhury will soon be recruiting interested community members to visit the PoRTaL lab and direct the lab’s two robots as they pull around a cart, pick up objects, clean a table and open drawers. Volunteers will have the opportunity to show the robots how it’s done – even if it’s done imperfectly.

Patricia Waldron is a writer for the Cornell Ann S. Bowers College of Computing and Information Science.