The world of image recognition software and artificial intelligence is advancing rapidly, but how reliable is it really?

Research by Cornell Computing and Information Science Ph.D. student Jason Yosinski found that computers, like humans, can be fooled by optical illusions. This could pose real problems for applications like facial recognition security systems.

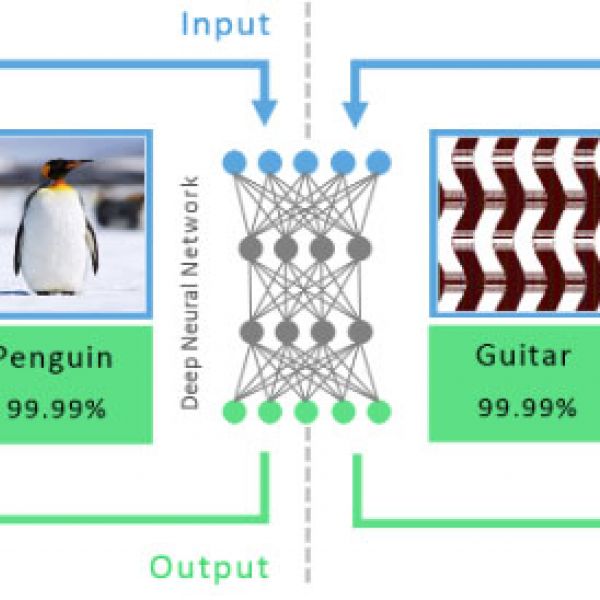

Computers can be trained to recognize images by being shown millions of photos of objects, each labeled with the name of the object it contains. Yosinski and the study team used a type of image recognition system called a deep neural network (DNN).
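To make "learning from labeled examples" concrete, here is a minimal sketch of supervised training. It uses a multiclass perceptron on made-up two-dimensional feature vectors, a far simpler model than the DNN used in the study. The function names `train_perceptron` and `predict` and the toy data are hypothetical illustrations, not the researchers' code.

```python
import random


def train_perceptron(examples, num_classes, epochs=20, seed=0):
    """Learn one weight vector per class from (features, label) pairs."""
    rng = random.Random(seed)
    dim = len(examples[0][0])
    weights = [[0.0] * (dim + 1) for _ in range(num_classes)]  # +1 for a bias term
    data = list(examples)
    for _ in range(epochs):
        rng.shuffle(data)
        for features, label in data:
            x = list(features) + [1.0]
            scores = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
            guess = max(range(num_classes), key=lambda c: scores[c])
            if guess != label:
                # Wrong answer: nudge the correct class toward this example
                # and the wrongly guessed class away from it.
                for i, xi in enumerate(x):
                    weights[label][i] += xi
                    weights[guess][i] -= xi
    return weights


def predict(weights, features):
    """Return the index of the highest-scoring class."""
    x = list(features) + [1.0]
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return max(range(len(weights)), key=lambda c: scores[c])
```

Trained on, say, points clustered around (0, 0) labeled 0 and points around (5, 5) labeled 1, the learned weights classify new points near each cluster with that cluster's label. A DNN follows the same show-and-correct idea, but with many layers and millions of parameters.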

“Many systems on the web are using ‘deep learning’ to analyze and draw inferences from large sets of data,” said Dr. Fred Schneider, Chair of the Computer Science Department at Cornell University. “For example, DNN might be used by a web advertiser to decide what ad to show you on Facebook or by an intelligence agency to decide if a particular activity is suspicious based on a pattern of activity. Deep learning is seen as a promising and exciting approach to solve a diverse set of problems.”

In this study, the DNN was paired with a genetic algorithm that generated images of white noise and patterns. Starting from a random image, the researchers mutated it slightly to produce a copy, then showed both the copy and the original to the DNN. If the DNN recognized the copy as an object in its repertoire with more certainty than the original, the researchers kept the copy and continued to mutate it step by step. Eventually this process produced dozens of images that the DNN recognized with over 99 percent confidence but that were unrecognizable to human eyes. This shows that computer vision and human vision rely on significantly different processes, a finding with far-reaching implications for security and AI.
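The mutate-and-keep-if-more-confident loop described above can be sketched as a simple evolutionary hill climber. This is a minimal illustration under stated assumptions, not the study's actual algorithm or network: the "DNN" here is a hypothetical toy classifier (a fixed random linear model with a softmax output), and the names `make_toy_classifier`, `mutate`, `evolve_fooling_image`, the class labels, and all parameter values are illustrative choices.

```python
import math
import random

# Hypothetical stand-in for a trained DNN: a fixed random linear model with a
# softmax output over a few made-up class names. A real experiment would query
# a trained image-recognition network instead.
CLASSES = ["cheetah", "guitar", "starfish"]
IMG_SIZE = 64  # a tiny 8x8 grayscale "image", flattened, pixels in [0, 1]


def make_toy_classifier(seed=0):
    rng = random.Random(seed)
    weights = [[rng.uniform(-1, 1) for _ in range(IMG_SIZE)] for _ in CLASSES]

    def classify(image):
        """Return one softmax probability (confidence) per class."""
        logits = [sum(w * x for w, x in zip(row, image)) for row in weights]
        top = max(logits)  # subtract the max for numerical stability
        exps = [math.exp(z - top) for z in logits]
        total = sum(exps)
        return [e / total for e in exps]

    return classify


def mutate(image, rng, rate=0.1, scale=0.3):
    """Return a copy with Gaussian noise added to each pixel with probability `rate`."""
    return [
        min(1.0, max(0.0, x + rng.gauss(0, scale))) if rng.random() < rate else x
        for x in image
    ]


def evolve_fooling_image(classify, target, steps=5000, seed=1):
    """Hill-climb from random noise toward high confidence for class `target`."""
    rng = random.Random(seed)
    best = [rng.random() for _ in range(IMG_SIZE)]  # start from random noise
    best_conf = classify(best)[target]
    for _ in range(steps):
        child = mutate(best, rng)
        conf = classify(child)[target]
        # Keep the mutated copy only if the classifier is more confident in it.
        if conf > best_conf:
            best, best_conf = child, conf
    return best, best_conf
```

For example, `evolve_fooling_image(make_toy_classifier(), target=CLASSES.index("cheetah"))` typically returns an image that is still structureless noise to a person but that the toy model labels "cheetah" with high confidence. Because the loop only ever asks "is the classifier more sure?", nothing pushes the image toward anything a human would recognize.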

"We think our results are important for two reasons," said Yosinski. "First, they highlight the extent to which computer vision systems based on modern supervised machine learning may be fooled, which has security implications in many areas. Second, of more practical use to machine learning researchers, the methods used in the paper provide an important debugging tool to discover exactly which artifacts the networks are learning."