By Tom Fleischman for the Cornell Chronicle

Cornell Tech researchers have developed a mixed-reality (XR) driving simulator system that could lower the cost of testing vehicle systems and interfaces, such as the turn signal and dashboard.

Using a publicly available headset, the system superimposes virtual objects and events onto the view of participants driving unmodified vehicles in low-speed test areas, allowing accurate and repeatable collection of data on behavioral responses in real-world driving tasks.

Doctoral student David Goedicke is lead author of “XR-OOM: MiXed Reality Driving Simulation With Real Cars For Research and Design,” which he will present at the Association for Computing Machinery’s CHI 2022 conference, April 30-May 5 in New Orleans.

The senior author is Wendy Ju, associate professor of information science at the Jacobs Technion-Cornell Institute at Cornell Tech and the Technion, and a member of the information science field at Cornell. Hiroshi Yasuda, human-machine interaction researcher at Toyota Research Institute, also contributed to the study.

This work is an offshoot of research Ju’s lab conducted in 2018, which resulted in VR-OOM, a virtual-reality on-road driving simulation program. The current work takes that a step further, Goedicke said, by combining real-time video of the real world, known as “video pass-through,” with virtual objects.

“What you’re trying to do is create scenarios,” he said. “You want to feel like you’re driving in a car, and the developer wants full control over the scenarios you want to show to a participant. Ultimately, you want to use as much from the real world as you can.”

The system was built with the Varjo XR-1 Mixed Reality headset and the Unity simulation environment, tools that previous researchers demonstrated could be used for driving simulation in a moving vehicle. XR-OOM integrates and validates these components in a working driving simulation system that incorporates real-world variables in real time.

“One of the issues with traditional simulation testing is that they really only consider the scenarios and situations that the designers thought of,” Ju said, “so a lot of the important things that happen in the real world don’t get captured as part of those experiments. (XR-OOM) increases the ecological validity of our studies, to be able to understand how people are going to behave under really specific circumstances.”

One challenge with XR versus VR is the faithful rendering of the outside world, Goedicke said. In mixed reality, what’s on the video screen needs to precisely match the outside world.

“In VR, you can trick the brain really easily,” he said. “If the horizon doesn’t quite match up, for instance, it’s not a big problem. Or if you’re making a 90-degree turn but it was actually more like 80 degrees, your brain doesn’t care all that much. But if you try to do this with mixed reality, where you’re incorporating actual elements of the real world, it doesn’t work at all.”

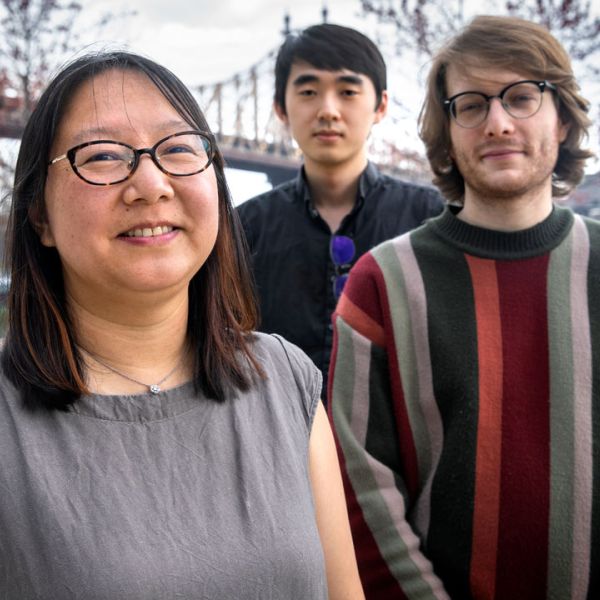

Doctoral student David Goedicke sits behind the wheel of the Fiat virtual simulation vehicle, inside the Tata Center at Cornell Tech.

To test the validity of their method, the researchers designed an experiment with three conditions: No headset (Condition A); headset with video pass-through only (Condition B); and headset with video pass-through and virtual objects (Condition C).

The participants were asked to perform several stationary tasks, including starting the vehicle, adjusting the seat and mirrors, fastening the safety belt, and verbally describing which dashboard lights were visible. Participants were also asked to perform several low-speed driving tasks, including left and right turns, slalom navigation and stopping at a line. The drivers in Conditions A and B had to navigate around actual physical cones, placed 8 feet apart; those in Condition C saw superimposed cones in their headsets.

Most participants successfully completed all cockpit tasks, and most of the failed attempts were attributable to unfamiliarity with the vehicle. Most were also successful in the driving tasks, with slalom navigation proving the most difficult for all, regardless of condition.

This success, Ju said, validates the technology’s potential as a low-cost alternative to elaborate facilities for testing certain onboard vehicle technologies.

“This kind of high-resolution, mixed-reality headset is becoming a lot more widely available, so now we’re thinking about how to use it for driving experiments,” Ju said. “More people will be able to take advantage of these things that will be commercially available and inexpensive really soon.”

Other co-authors are Sam Lee, M.S. ’21, and doctoral students Alexandra Bremers and Fanjun Bu.

This research was supported by the Toyota Research Institute.

This article originally appeared in the Cornell Chronicle.