When Isay Katsman ’20 met other researchers at the recent International Conference on Computer Vision, he let them assume he was a graduate student.

“It was just easier,” said Katsman, one of four undergraduate co-authors of “Enhancing Adversarial Example Transferability With an Intermediate Level Attack,” presented at the conference, held Oct. 27 to Nov. 2 in Seoul, South Korea. “You sort of want to focus on the material more than the other stuff.”

It was distracting, he said, that fellow attendees were so surprised to learn that a paper written by undergraduates was among the 1,077 accepted by the prestigious conference, which received 4,303 submissions, an acceptance rate of about 25%.

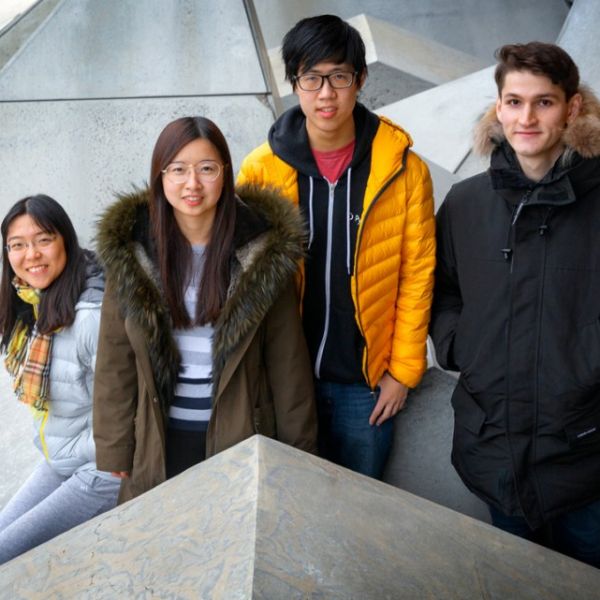

Their success was an honor but not a fluke: Katsman and co-first authors Horace He ’20, Qian Huang ’21 and Zeqi Gu ’21 are all members of the year-old Cornell University Vision and Learning Club, which aims to publish machine learning research at major conferences. They were aided by equipment donations from Facebook as part of its collaboration with Cornell Tech, making it possible for the students to run the algorithms they needed.

“It’s really unheard of for undergraduates to have these kinds of resources,” said Serge Belongie, professor of computer science at Cornell Tech, whose lab funds the students’ club. Belongie is working with Facebook on a project to combat “deepfakes” – faked audio and video created using artificial intelligence – and around $10,000 from that grant was earmarked for the undergraduate research group.

The club used the money to purchase graphics processing units – costly, powerful processors that are necessary for training most machine learning models, and generally available only to graduate students and faculty. On central processing units – the general-purpose chips at the core of most computers – the thousands or millions of iterations needed to train machine learning algorithms could take weeks or months.

“If you have a good idea but you can’t verify it because it’s going to take a year to do the computation, then you can’t really do the research,” Huang said. “So now we have the tools we need to do the work.”

Facebook has since donated another $30,000 to the club, which will pay for eight more graphics processing units, the students said.

In their paper, the students tackled the problem of adversarial examples – tiny tweaks to an image that are undetectable to the human eye but completely confusing to a neural network tasked with classifying images. Adversarial examples created by hackers or others with malicious intent could potentially disorient autonomous cars, for example, or subvert image recognition.
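As a rough illustration of the idea, and not the students' method, the sketch below applies one step of the well-known fast gradient sign technique to a toy logistic-regression "classifier." The weights, the random 784-pixel "image," the helper name `fgsm_perturb` and the step size `eps` are all assumptions chosen for the demonstration.

```python
import numpy as np

def fgsm_perturb(x, w, b, label, eps):
    """One fast-gradient-sign step against a toy logistic-regression model.

    x is a flattened image with pixel values in [0, 1]; label is 0 or 1.
    """
    # Probability of class 1 under the toy model.
    p = 1.0 / (1.0 + np.exp(-(w @ x + b)))
    # Gradient of the cross-entropy loss with respect to the input pixels.
    grad = (p - label) * w
    # Nudge every pixel by at most eps in the direction that raises the loss.
    return np.clip(x + eps * np.sign(grad), 0.0, 1.0)

# Hypothetical model and image, made up for this sketch.
rng = np.random.default_rng(0)
w = rng.normal(size=784)
x = rng.uniform(0.2, 0.8, size=784)
b = 0.5 - w @ x          # bias chosen so the clean image scores as class 1
label = 1

x_adv = fgsm_perturb(x, w, b, label, eps=0.01)

clean_pred = int(w @ x + b > 0)
adv_pred = int(w @ x_adv + b > 0)
max_change = float(np.max(np.abs(x_adv - x)))
print(clean_pred, adv_pred, max_change)
```

No pixel moves by more than 1% of its range, yet the toy model's prediction flips, because the many small changes all push the score in the same direction.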

The students considered what’s known as transferability – the ability of adversarial examples for one model to fool another model. They proposed and explained a new method for enhancing the transferability of adversarial examples – shedding light on how this phenomenon works in order to more effectively combat it.
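Transferability can be pictured with a small, hypothetical experiment: craft the perturbation using only a "source" model, then check whether it also fools a separate "target" model. The sketch below assumes two toy linear classifiers with related but different weights; the names `w_src`, `w_tgt`, `attack` and `accuracy`, the data and the step size are all invented for illustration, and the attack is a generic sign-based step rather than the method from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
dim = 200

# Two hypothetical linear classifiers: the target resembles the source
# but has different weights, standing in for a separately trained model.
w_src = rng.normal(size=dim)
w_tgt = w_src + 0.5 * rng.normal(size=dim)

def predict(w, X):
    """Class 1 if the linear score is positive, else class 0."""
    return (X @ w > 0).astype(int)

def accuracy(w, X, y):
    return float(np.mean(predict(w, X) == y))

def attack(w, X, y, eps):
    """Shift each input by eps per feature, against its label, using only w."""
    push = np.where(y[:, None] == 1, -1.0, 1.0)
    return X + eps * push * np.sign(w)[None, :]

X = rng.normal(size=(500, dim))
y = predict(w_src, X)                    # labels taken from the source model

X_adv = attack(w_src, X, y, eps=0.2)     # crafted without access to w_tgt

src_clean, src_adv = accuracy(w_src, X, y), accuracy(w_src, X_adv, y)
tgt_clean, tgt_adv = accuracy(w_tgt, X, y), accuracy(w_tgt, X_adv, y)
print(src_clean, src_adv, tgt_clean, tgt_adv)
```

The drop in the target model's accuracy, even though the attack never saw its weights, is the transfer effect. Real neural networks differ far more from one another than these two toy models do, which is why improving transfer is a research problem.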

“Hopefully this is a step toward better understanding the vulnerabilities of existing machine learning models in a setting that’s very relevant to a real-world attack,” Katsman said. “We hope this will eventually help the community create robust models that are impermeable to these attacks.”

Fine-tuning the adversarial example according to a particular layer of the neural network made it far more likely to transfer to other models, the students found; but when they tried to explain their method, they discovered it worked for the opposite of the reason they’d initially supposed. This reversal gave the students new insight into the rigor the research process demands, they said.

“Our initial intuition about how it worked shifted 180 degrees,” Huang said. “That was the most surprising part.”

Huang, He and Gu are math and computer science majors in the College of Arts and Sciences; Katsman is a math and computer science major in the College of Engineering.

In addition to their conference presentation, the students attended a poster session where they discussed their work one-on-one with other researchers. “People were coming up to us and trying to understand our work, and we were explaining it to them,” Gu said. “So that makes you feel pretty good, that so many people are wanting to know about our research.”

The paper was also co-authored by Belongie and Ser-Nam Lim of Facebook. Kavita Bala, professor and chair of computer science, and Bharath Hariharan, assistant professor of computer science, are also affiliated faculty with the students’ club, which is sponsored by Facebook AI.

By Melanie Lefkowitz for the Cornell Chronicle